Making Fog With Your Bare Hands

We finally have smooth fog!!

In this post I want to retrace my implementation journey. From total graphics programming noob to ... novice that writes simple shaders, batches draw-calls and generally abuses graphics APIs.

At the beginning of this journey, I know nothing about shaders. All I know is the SDL2 canvas interface: Copying textures, drawing shapes.

What is Fog about?

At the core, HyperCoven is tiled hexagonally. Or, if you will, it’s just staggered rectangles. Or isometric tiles. Whichever way you slice it. Anyhow, the status of "area visibility" is computed per tile on this structure.

Now, for each tile, we would like to convey on screen whether it is visible to our units. Tiles that are not visible are said to lie "in the Fog of War."

Traditionally, a kind of grey colour is drawn on the terrain to express this. Meanwhile terrain which has not been discovered at all is hidden under plain black.

There are two simple properties we expect of fog visually.

- It should be a quite regular indistinct mass (no patterns inside it)

- It should be denser on the inside than on its edges

There were two constraints I set for my implementation.

- Should work without an intermediate texture

- Should work without explicit awareness of neighbouring tiles

My reasoning for these constraints is mostly laziness, vague performance considerations, and finally a hunch that a simple ("elegant") solution should be possible.

So I set out on my quest.

Textures

My initial idea is simple: For each tile, paint a "foggy" texture with some transparency. Paint the texture twice as large as the tile. The inside of fog will have twice the texture, making it thicker than the edges.

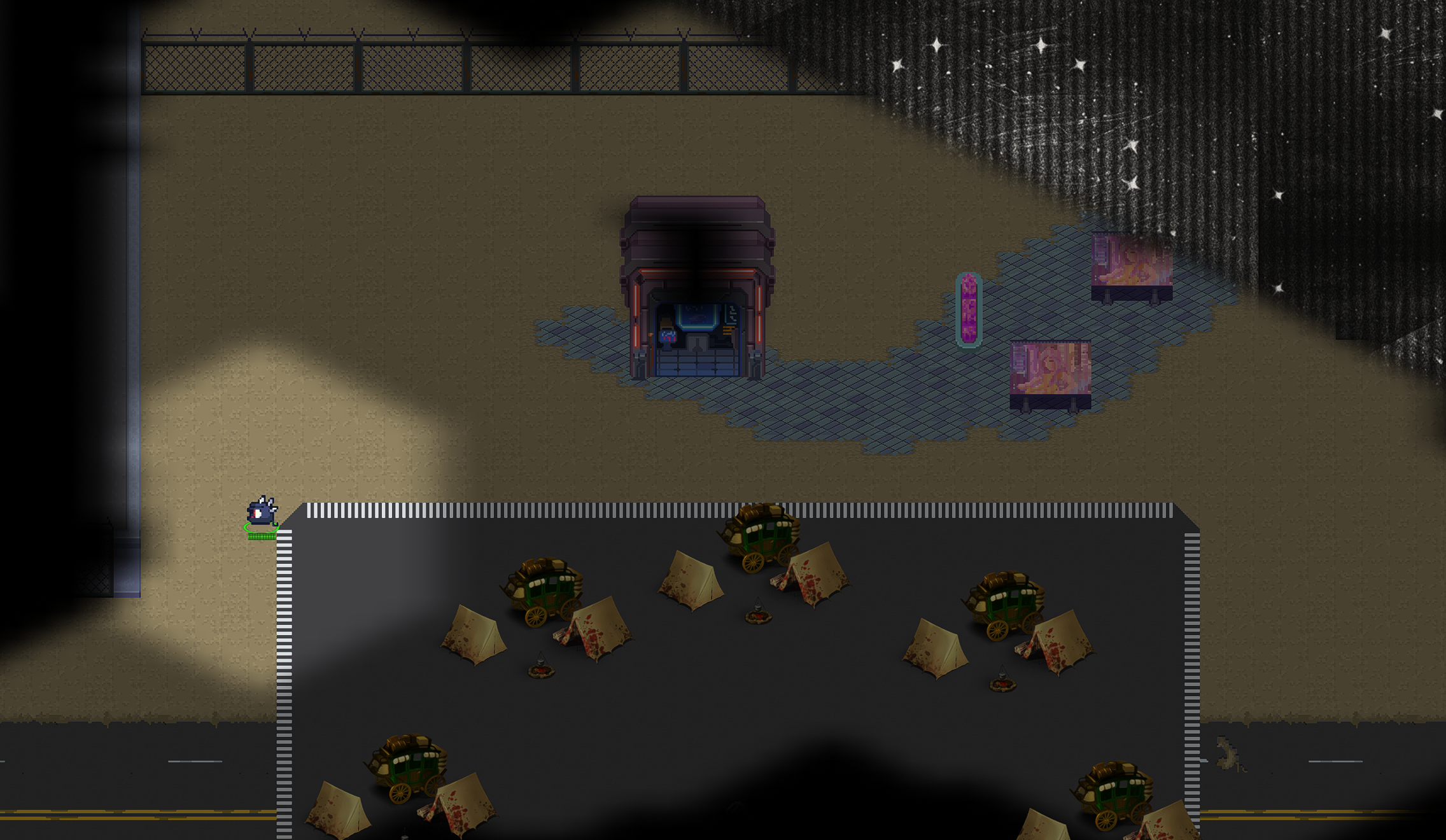

Fog version 1.

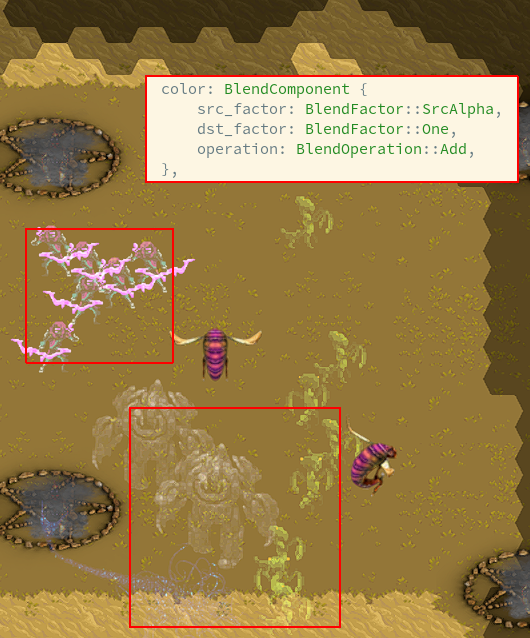

This is using BlendMode::Add (or Mod, I forget) to alleviate the issue of visible texture edges inside the fog.

It also uses a very noisy texture, rotating it randomly, all in the hopes of creating so much noise that fog should form.

I think it looks OK.

There is feedback that the fog is white when it should be dark. However, with darker textures the approach just produces mud. It doesn’t look like fog, more like smog: some issue that prevents clear vision.

So we switch to drawing plain dark hexagon textures. "Without" overlap. We necessarily need to draw with a little bit of overlap, because the texture cannot be pixel-perfectly tiling for all zoom levels.

I somewhat enjoy this textured effect. It does not really look like fog, but it looks interesting.

Vertices and Shapes

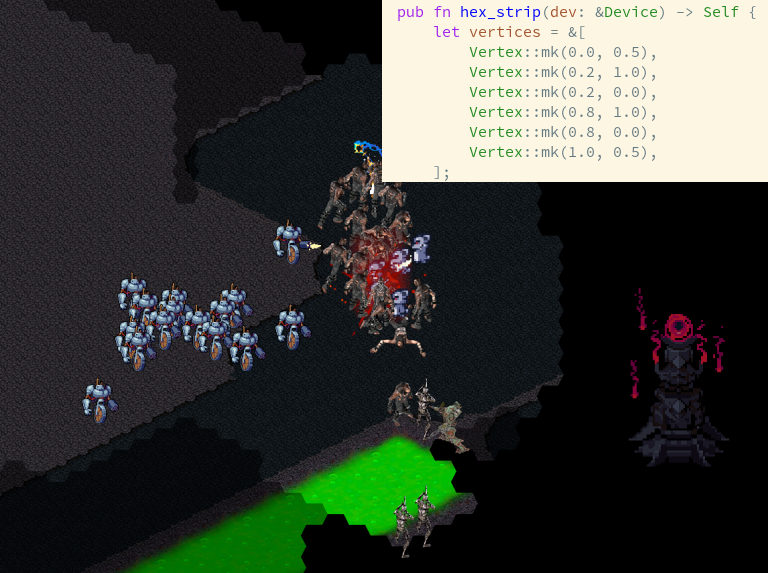

I start a project to re-implement the SDL2 renderer on top of wgpu. The hope is to open up avenues for making the game look better, by having access to more low-level graphics APIs.

First of all this endeavour teaches me about vertices. It turns out that the "destination" of our texture copies is defined quite abstractly as a [0.0, 1.0] rectangle. Whenever we copy a texture onto an area of our drawing target, a 4x4 transformation matrix is computed and pushed to a GPU buffer.

Then in the "Vertex Shader" step, this matrix is applied to each of the abstract rectangle’s defining points (vertices), in order to find the concrete pixel area on screen, where we want to draw.
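As a rough illustration of that step, here is a minimal CPU-side sketch in Rust. The names `dest_matrix` and `transform` are made up for this example, and it stops at pixel coordinates; a real pipeline would additionally map pixels into clip space.

```rust
/// A column-major 4x4 matrix, as it might be pushed to a GPU buffer.
type Mat4 = [[f32; 4]; 4];

/// The "abstract" rectangle: four corners in [0.0, 1.0].
const UNIT_QUAD: [[f32; 2]; 4] = [[0.0, 0.0], [1.0, 0.0], [1.0, 1.0], [0.0, 1.0]];

/// Hypothetical helper: scale the unit quad to (w, h) and move it to (x, y).
fn dest_matrix(x: f32, y: f32, w: f32, h: f32) -> Mat4 {
    [
        [w, 0.0, 0.0, 0.0],   // column 0: x axis
        [0.0, h, 0.0, 0.0],   // column 1: y axis
        [0.0, 0.0, 1.0, 0.0], // column 2: z axis (unused in 2D)
        [x, y, 0.0, 1.0],     // column 3: translation
    ]
}

/// What the vertex shader does per vertex: matrix * (vx, vy, 0, 1).
fn transform(m: &Mat4, v: [f32; 2]) -> [f32; 2] {
    let p = [v[0], v[1], 0.0, 1.0];
    let mut out = [0.0f32; 4];
    for row in 0..4 {
        for col in 0..4 {
            out[row] += m[col][row] * p[col];
        }
    }
    [out[0], out[1]]
}
```

With `dest_matrix(10.0, 20.0, 64.0, 32.0)`, the corner (0, 0) lands on pixel (10, 20) and the corner (1, 1) on pixel (74, 52).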

So we might just as well define an "abstract hexagon!" And tile it on the screen, producing seamless fog. No longer internally patterned.

Even though our hexagon is defined in [0.0,1.0] and converted to pixels in a sequence of floating-point operations, the result is indeed pixel-perfect with no overlaps nor holes, at any scale.

(N.B. this needs to use instanced drawing, otherwise it will be terribly slow. When drawing textures with SDL2, subsequent draws of the same texture are automatically batched into a single draw call; that’s why it worked for us.)
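A sketch of what such an "abstract hexagon" could look like in Rust. The pointy-top proportions, the 3/4 row stride and all names here are assumptions for illustration, not the game's actual geometry. The shape is defined once; each instance only contributes its own origin.

```rust
/// Hypothetical pointy-top hexagon inscribed in the unit square.
const UNIT_HEX: [[f32; 2]; 6] = [
    [0.5, 0.0], [1.0, 0.25], [1.0, 0.75],
    [0.5, 1.0], [0.0, 0.75], [0.0, 0.25],
];

/// Fan around vertex 0: four triangles cover the hexagon.
const HEX_INDICES: [u16; 12] = [0, 1, 2, 0, 2, 3, 0, 3, 4, 0, 4, 5];

/// Per-instance data: where tile (col, row) starts on screen, in pixels.
/// Odd rows are shifted by half a tile; rows advance by 3/4 of the height
/// so that the slanted edges interlock.
fn tile_origin(col: i32, row: i32, w: f32, h: f32) -> [f32; 2] {
    let stagger = if row.rem_euclid(2) == 1 { 0.5 * w } else { 0.0 };
    [col as f32 * w + stagger, row as f32 * 0.75 * h]
}

/// Per-vertex work: scale the shared unit shape and add the instance origin.
fn hex_vertex(i: usize, origin: [f32; 2], w: f32, h: f32) -> [f32; 2] {
    [origin[0] + UNIT_HEX[i][0] * w, origin[1] + UNIT_HEX[i][1] * h]
}
```

Neighbouring tiles end up with shared corner positions, for example the right-hand corners of tile (0, 0) coincide with the left-hand corners of tile (1, 0), which is what lets the rasterizer fill the seam without gaps or double coverage.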

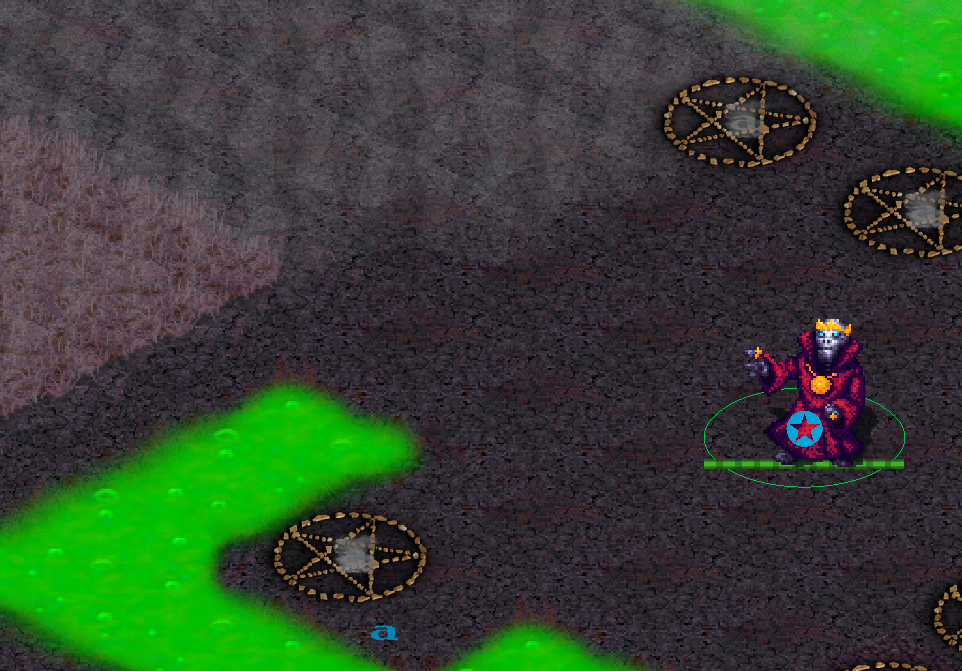

Fog now at version 3. While it conveys well what your units can and cannot see, I feel that this is still too technical. It gives away too much of the underlying system.

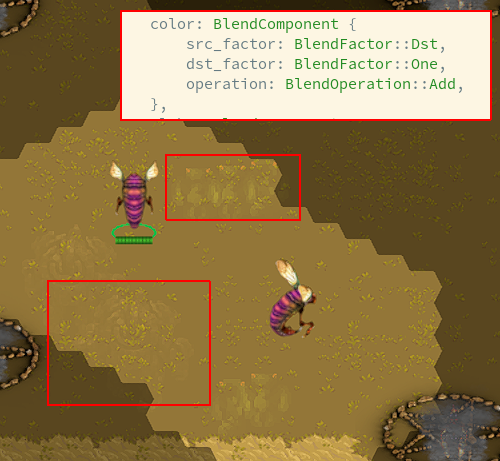

Blend Modes

Another thing I learn about is blend modes. The SDL2 API offers 3-4 different ones, but the underlying graphics APIs are far more flexible. We can form a new blend mode to represent cloaked units we have not yet revealed, leaving them barely visible.

For revealed cloaked units, the existing BlendMode::Add is quite good.

Fragments

Finally we are getting to fragment shaders.

It turns out a fragment shader is indeed called separately once for each destination pixel. So-called "uv" coordinates allow it to be independent of scale: they represent the "percent-wise" progress across the shape along each axis.

A rectangle that is concretely 3px wide on the shader target has three possible "uv.x" coordinates in the fragment shader:

- 0.5 / 3.0

- 1.5 / 3.0

- 2.5 / 3.0

(Depending on your graphics API, this may be 0.5 lower, for an effective range of (-0.5, 0.5) rather than (0, 1).)
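The list above follows a simple rule: each pixel is sampled at its centre. A small Rust sketch, assuming the (0, 1) range (the function name is made up for this example):

```rust
/// uv.x at the centre of each of `width_px` pixels, assuming a (0, 1) range.
fn uv_centers(width_px: u32) -> Vec<f32> {
    (0..width_px)
        .map(|i| (i as f32 + 0.5) / width_px as f32)
        .collect()
}
```

For `uv_centers(3)` this gives the three values 0.5/3.0, 1.5/3.0 and 2.5/3.0; a wider rectangle simply gets more, finer steps between 0 and 1.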

Now finally we may define an "abstract smooth shape," by writing a fragment shader:

- fade_x = abs(uv.x - 0.5) * 2.0

- fade_y = abs(uv.y - 0.5) * 2.0

- intensity = (1.0 - fade_x) * (1.0 - fade_y)

Meaning we use 100% intensity in the exact middle, and fade out linearly to 0.0 along both axes. This can be painted at any size. If there are more pixels, the fade-out will have more steps. The exact middle pixel (if there is one) will always be at 100% intensity.
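Written as a plain Rust function instead of shader code, the shape could look like this. It assumes the intensity is the product of the two per-axis fades, (1.0 - fade_x) * (1.0 - fade_y), which is the form that is 1.0 in the centre and reaches 0.0 on every edge.

```rust
/// Fog intensity for one fragment, given uv in [0, 1] on both axes.
/// Assumes the bilinear "tent" form: 1.0 in the centre, 0.0 on every edge.
fn intensity(u: f32, v: f32) -> f32 {
    let fade_x = (u - 0.5).abs() * 2.0; // 0.0 in the middle, 1.0 at the edge
    let fade_y = (v - 0.5).abs() * 2.0;
    (1.0 - fade_x) * (1.0 - fade_y)
}
```

Halfway between centre and edge on one axis, e.g. `intensity(0.25, 0.5)`, the result is 0.5.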

We can now tile this to rectangular targets on a staggered grid at (distance = half size) to get fade_in==fade_out on the insides; so that there is a stable intensity=1 everywhere in the fog, as well as a smooth fade-out to all edges.
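To check that the overlaps really add up, here is a small CPU-side sketch. It assumes square quads, odd rows shifted by a quarter of the quad size, and the product-of-tents intensity; the real tile proportions may differ, and the function names are made up.

```rust
/// 1D tent: 1.0 at the centre of a quad spanning [start, start + size].
fn tent(x: f32, start: f32, size: f32) -> f32 {
    let u = (x - start) / size;
    if !(0.0..=1.0).contains(&u) {
        return 0.0;
    }
    1.0 - (u - 0.5).abs() * 2.0
}

/// Sum of all fog quads covering pixel (x, y). Quads are `size` wide and
/// tall, spaced `size / 2` apart, with odd rows shifted by `size / 4`.
fn stacked_intensity(x: f32, y: f32, size: f32, cols: i32, rows: i32) -> f32 {
    let step = size / 2.0;
    let mut sum = 0.0;
    for row in 0..rows {
        let stagger = if row % 2 == 1 { step / 2.0 } else { 0.0 };
        for col in 0..cols {
            let fx = tent(x, col as f32 * step + stagger, size);
            let fy = tent(y, row as f32 * step, size);
            sum += fx * fy;
        }
    }
    sum
}
```

Deep inside an 8x8 field of 64px quads the sum comes out at 1.0, while a pixel near the outer border only sees the rising flank of a single quad and stays well below 1.0.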

But this is additive. The intensities add up to 1, like (1+0), (0.5+0.5), (0.3+0.7). Meanwhile, standard alpha blending is multiplicative. To "make an area 50% darker," you paint over it with full black colour at alpha=0.5. But doing this twice in a row leaves 0.5*0.5=0.25 of the original brightness, rather than the 0 that adding 0.5+0.5 would give. We can’t just set colour=black, alpha=intensity, and stack it up.
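The mismatch is easy to reproduce on a single colour channel. A minimal Rust sketch of standard "over" blending (function names are made up for this example):

```rust
/// Standard "over" blending for one colour channel.
fn alpha_blend(dst: f32, src: f32, alpha: f32) -> f32 {
    src * alpha + dst * (1.0 - alpha)
}

/// Paint black (0.0) over `dst` once per entry in `alphas`.
fn stack_black(dst: f32, alphas: &[f32]) -> f32 {
    alphas.iter().fold(dst, |acc, &a| alpha_blend(acc, 0.0, a))
}
```

Starting from white (1.0), the pairs (1, 0), (0.5, 0.5) and (0.3, 0.7) all add up to 1, yet they leave roughly 0.0, 0.25 and 0.21 of the brightness. So the fog would not only be too light, it would also be uneven inside.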

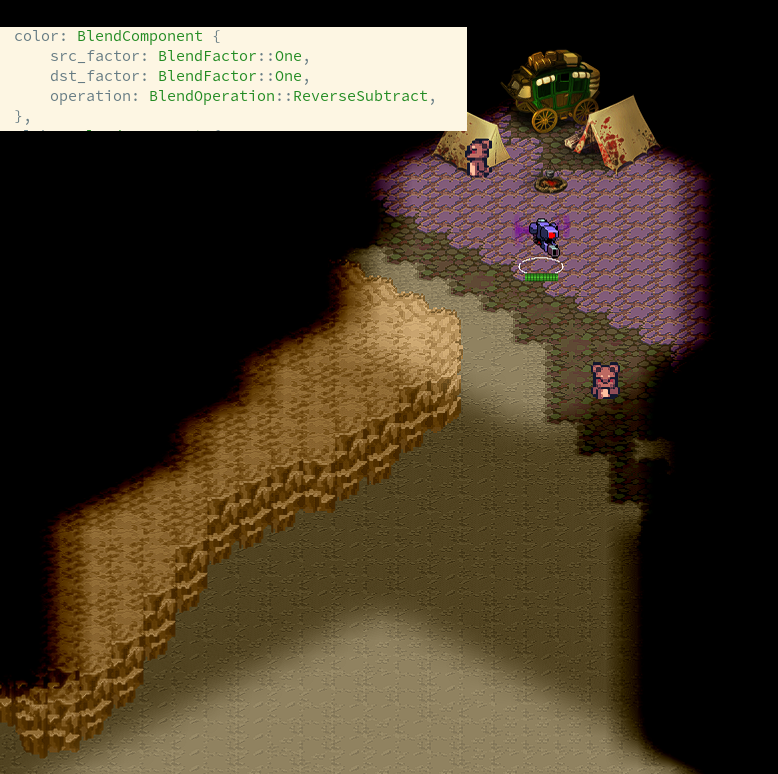

Fortunately we know about blend modes. The first, rather naive, approach is to make a "subtract" effect: We set colour=intensity and subtract this value from the destination.

To make unexplored areas pitch black and others only foggy, we use different base intensities, e.g. subtracting 1.0 for unexplored tiles, and only 0.1 per foggy tile, so it would take off 10% from pure white.

It is smooth. It distorts the colours. (Cool imho.) But unfortunately with this approach, if the base colour value of a terrain is low enough, it will turn pitch black even if it should be foggy. Because we are just subtracting. We need to get the multiplication working after all, but it needs to be after the overlapping.
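On one colour channel, the problem looks like this. The sketch assumes a normalized colour target that clamps at 0.0, and the terrain values are made-up examples.

```rust
/// Subtractive blending for one colour channel, clamped at 0.0.
fn subtract_blend(dst: f32, fog: f32) -> f32 {
    (dst - fog).max(0.0)
}
```

Bright terrain at 0.8 under 0.1 of fog ends up around 0.7, which is fine. Dark terrain at 0.05 under the same fog ends up at 0.0, exactly like an unexplored tile that had 1.0 subtracted.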

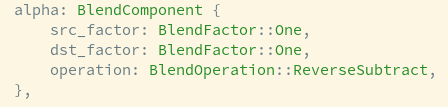

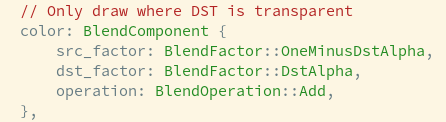

So one more hack. The interesting thing about alpha is that it’s essentially meaningless on a target. On a flat 2D texture, there is nothing "behind" the texture that would become visible by changing its alpha. So we abuse alpha as an accumulation channel for our intensity, while leaving the RGB channels completely untouched.

Then in a second step, throw black colour on the whole screen, but hitting each pixel only according to its inverse alpha value.
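A CPU-side sketch of the two passes for a single pixel, in Rust. The convention used here is an assumption: the target starts at alpha = 1.0 and each fog fragment subtracts its intensity from alpha, so that "inverse alpha" is the accumulated fog. The struct and function names are made up.

```rust
#[derive(Clone, Copy, Debug, PartialEq)]
struct Pixel {
    rgb: [f32; 3],
    alpha: f32,
}

/// Pass 1: a fog quad only touches alpha, never RGB. Assumed convention:
/// the target starts at alpha = 1.0 and fog subtracts from it.
fn accumulate_fog(p: &mut Pixel, intensity: f32) {
    p.alpha = (p.alpha - intensity).max(0.0);
}

/// Pass 2: draw black over the pixel, weighted by the inverse alpha.
fn apply_black(p: &mut Pixel) {
    const BLACK: f32 = 0.0;
    let weight = 1.0 - p.alpha;
    for c in p.rgb.iter_mut() {
        *c = BLACK * weight + *c * (1.0 - weight);
    }
}
```

Two overlapping quads contributing 0.2 and 0.3 add up to 0.5 of fog, and the second pass then halves every channel. Because the darkening is a multiplication applied once, after the overlaps have been summed, even very dark terrain keeps a proportional amount of its colour instead of clipping to black.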

Voilà.

Closing

Clearly there are even "better" ways to build the fog. A single fragment shader may run over the entire screen, computing fog intensity for each pixel by looking up visibility of nearby tiles. The visibility grid of tiles could be held in a uniform variable. Instead of writing each foggy pixel thrice, such an approach would only write them once. It would also save thousands of vertex shader invocations.
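To give an idea of what such a per-pixel lookup might do, here is a rough Rust sketch. It is not something the game implements: it simplifies the grid to plain rectangles instead of staggered hexagons, and `FogGrid` and its methods are invented for this example.

```rust
/// Hypothetical visibility grid: 1.0 = fogged, 0.0 = visible, row-major.
struct FogGrid {
    cols: usize,
    rows: usize,
    fog: Vec<f32>,
}

impl FogGrid {
    /// Clamped lookup, so pixels near the border reuse the outermost tiles.
    fn at(&self, col: i64, row: i64) -> f32 {
        let c = col.clamp(0, self.cols as i64 - 1) as usize;
        let r = row.clamp(0, self.rows as i64 - 1) as usize;
        self.fog[r * self.cols + c]
    }

    /// Fog for one pixel: bilinear blend of the four nearest tile centres.
    fn sample(&self, px: f32, py: f32, tile: f32) -> f32 {
        let gx = px / tile - 0.5;
        let gy = py / tile - 0.5;
        let (x0, y0) = (gx.floor(), gy.floor());
        let (tx, ty) = (gx - x0, gy - y0);
        let (c, r) = (x0 as i64, y0 as i64);
        let top = self.at(c, r) * (1.0 - tx) + self.at(c + 1, r) * tx;
        let bottom = self.at(c, r + 1) * (1.0 - tx) + self.at(c + 1, r + 1) * tx;
        top * (1.0 - ty) + bottom * ty
    }
}
```

Each pixel is computed and written exactly once, and the result already is the final fog density, so no accumulation pass is needed.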

I would also like to bring back the colour-shedding "sepia" effect of the colour-subtract solution. That’s another project, though.


Hypercoven

Real-Time Cacophony

| Status | Released |

| Author | Filmstars |

| Genre | Strategy, Survival |

| Tags | 2D, Isometric, mind-bending, Multiplayer, Real time strategy, Roguelite, Singleplayer, Tactical, Tower Defense |

| Languages | English |
